I used ChatGPT to write a 1,500-word blog post on the keyword "animals that eat plants" and got it to pass AI detection software.

It took me a total of 37 minutes from start to finish.

For preliminary research, I took 200 words of AI content and ran three individual experiments to see how little I could change to pass AI detection:

Experiment 1: Just making the changes Hemingway Editor suggested (6 minutes) — zero impact

Experiment 2: Just changing pronouns and a few sentence structures (2 min 54 seconds) — zero impact

Experiment 3: Adding originality with quotes and personal stories (12 minutes) — 99% probability of passing, success!

I then ran a final experiment, testing a combination of the three on the full 1,500-word article.

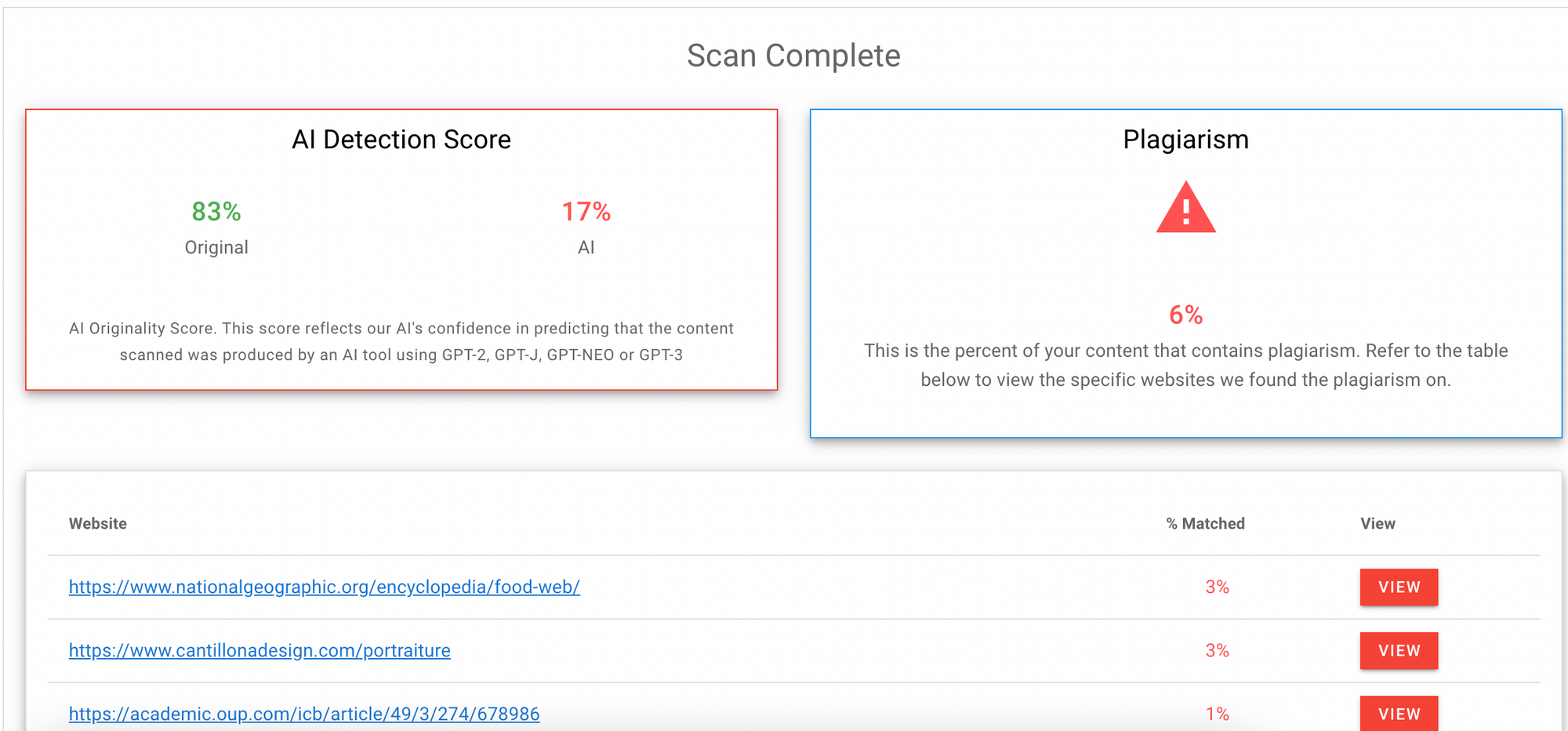

In just 27 minutes of editing, 1,500 words of AI content moved from 0% to 83% probability of being original (measured using the AI detection software Originality.ai).

It's important to note that this experiment focused on passing AI detection in as short a time as possible. Whether you'd want to is a separate question altogether.

Google may not detect the poor quality of this article, but the reader certainly will. And Google listens to engagement signals.

And, as Seth Godin said in his latest note, "If your work isn’t more useful or insightful or urgent than GPT can create in 12 seconds, don’t interrupt people with it."

—

In the rest of this article, I'll break down the experiments so you can see exactly what I did and what the results look like.

Getting Started—Writing an SEO article with AI

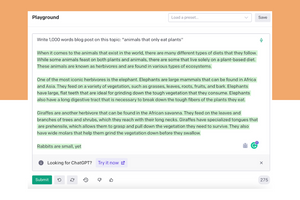

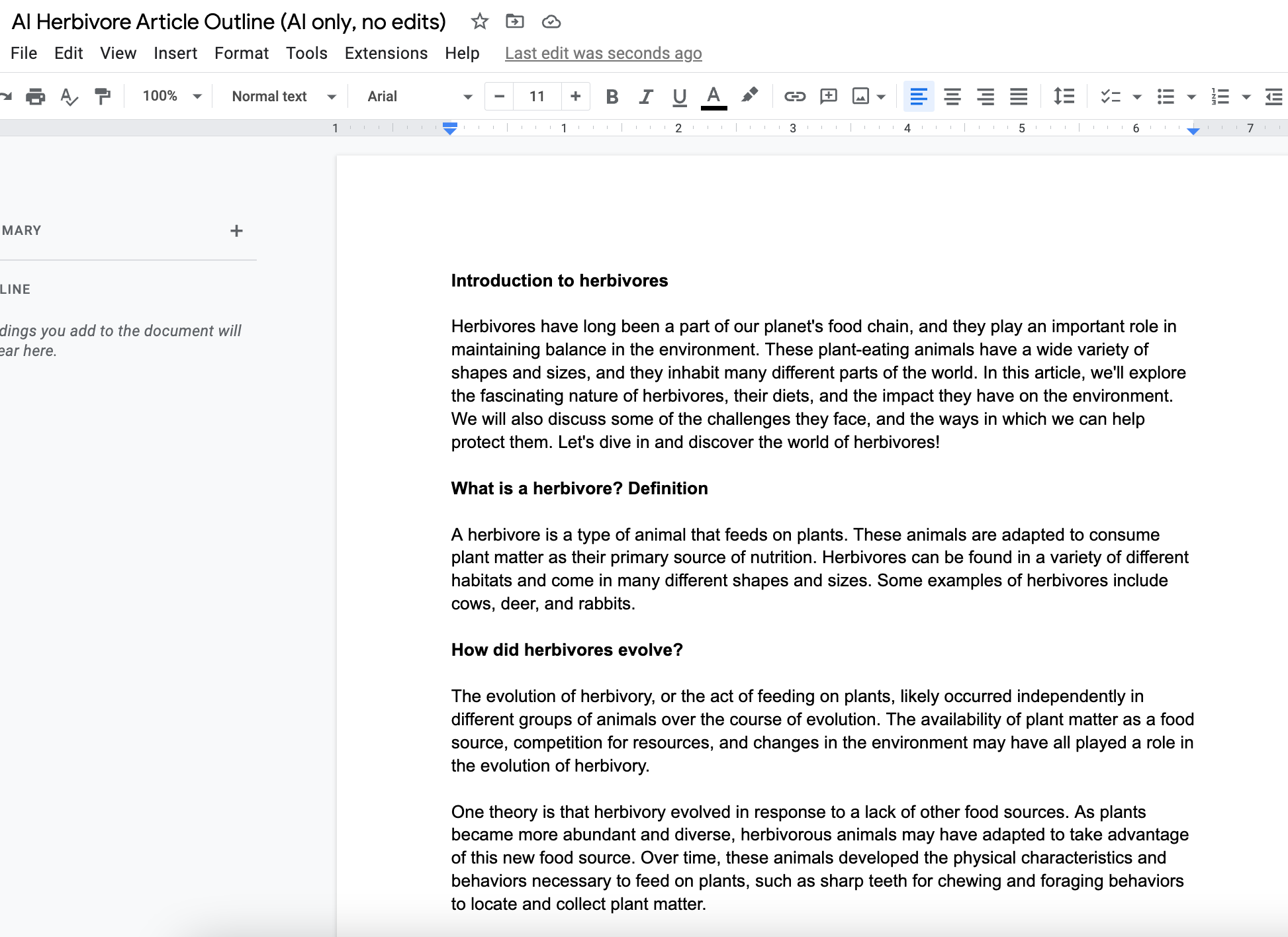

I asked OpenAI to produce a blog post using the keywords "animals that only eat plants" and the prompt "write me an article". It gave me this 194-word piece of text.

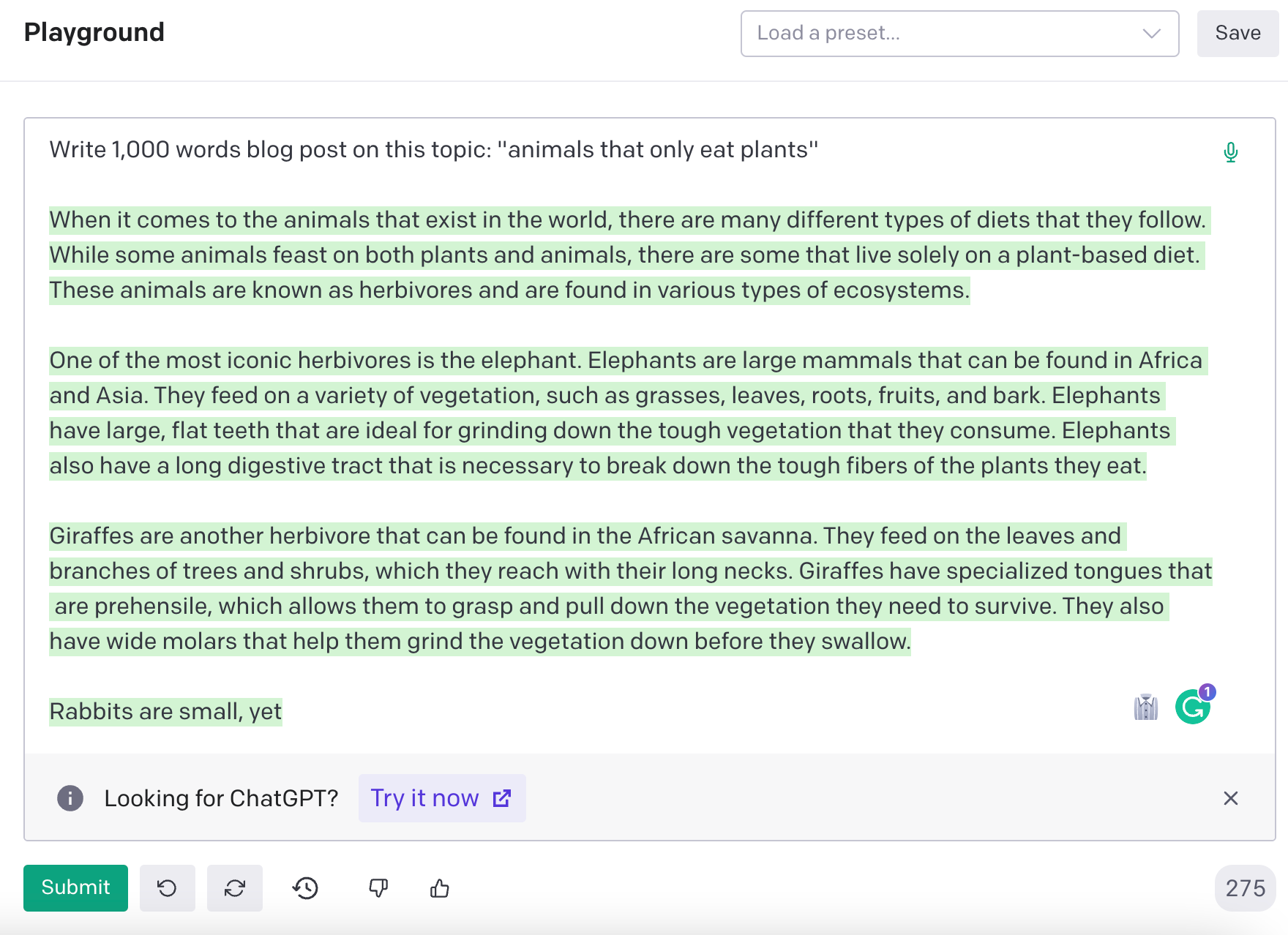

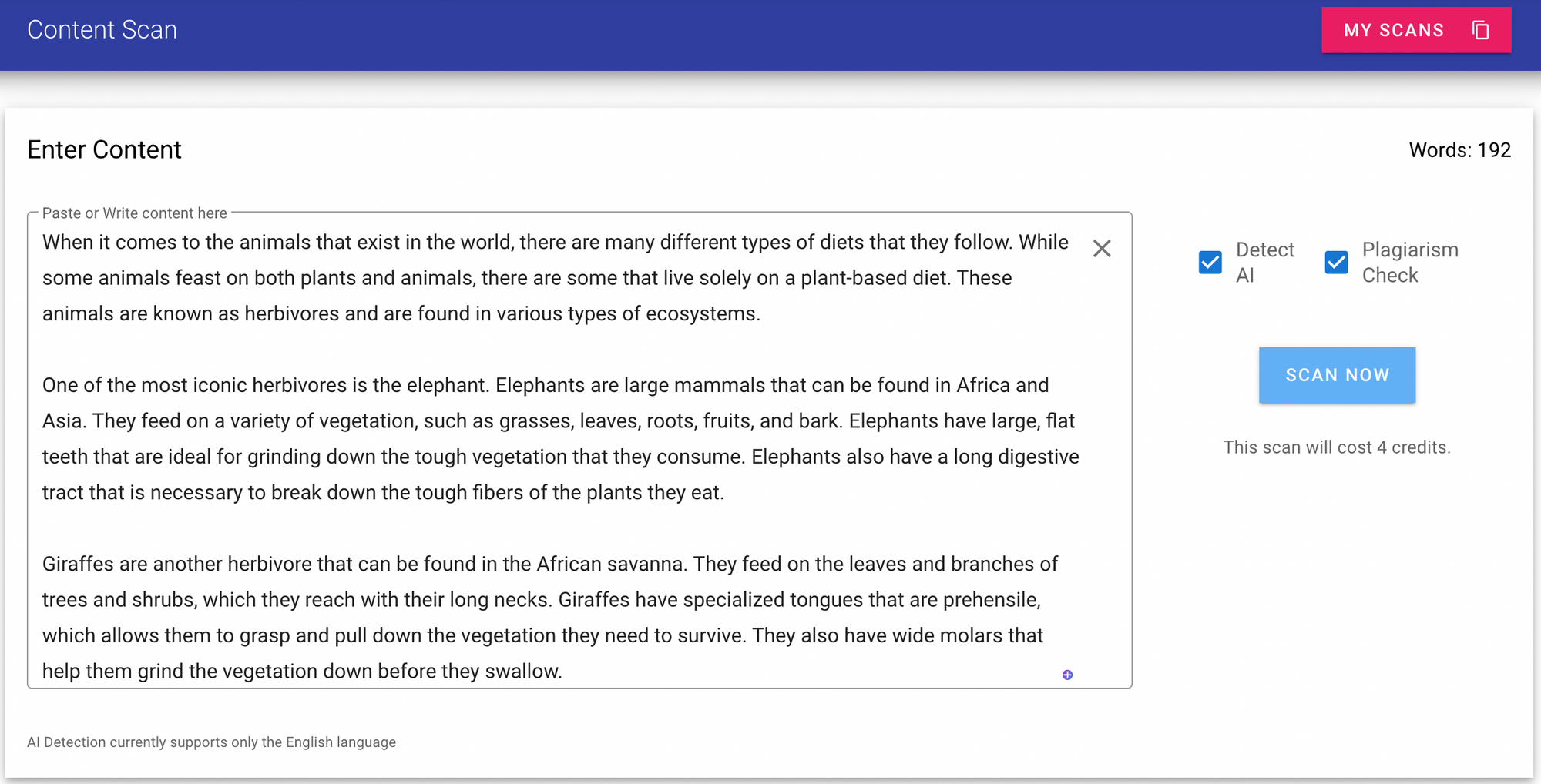

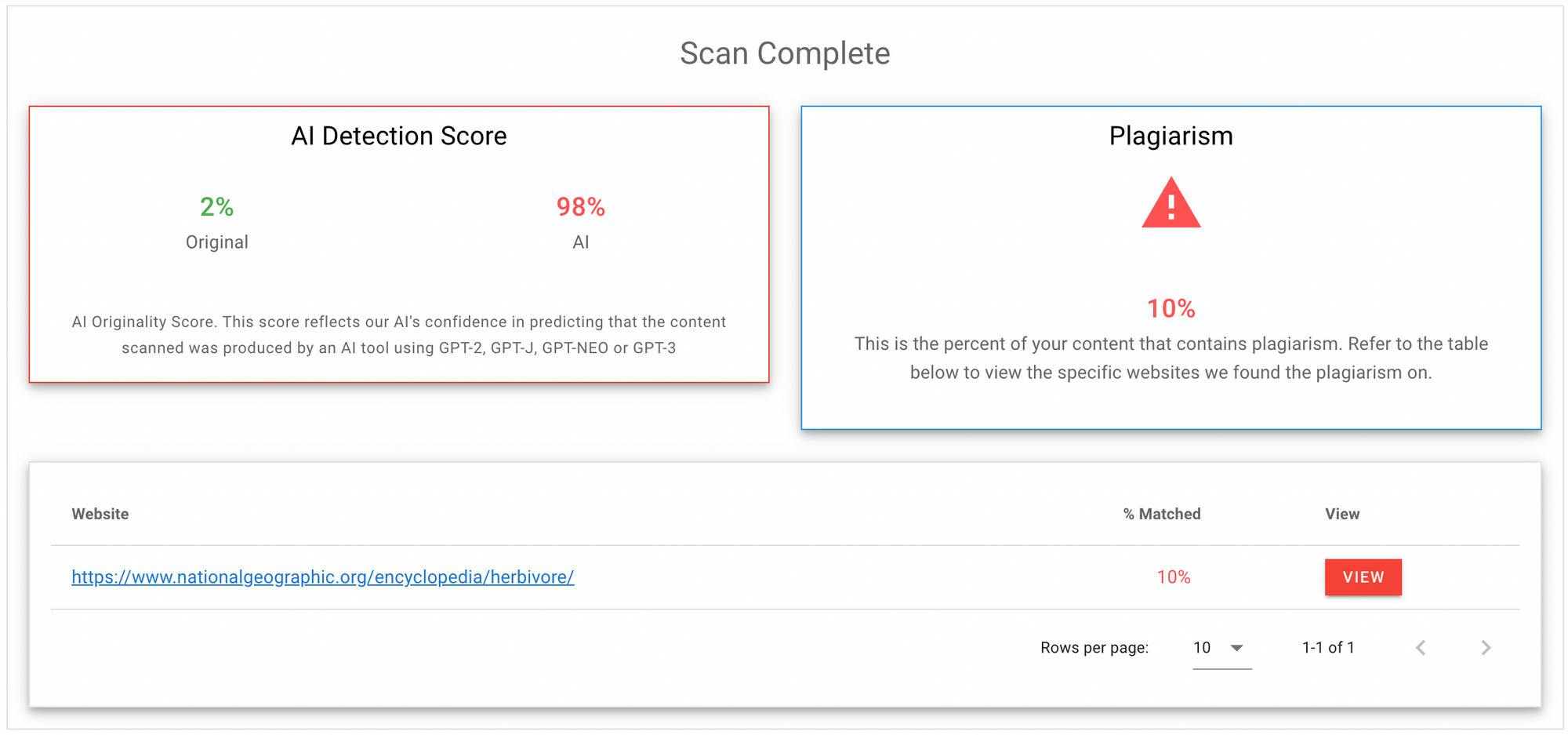

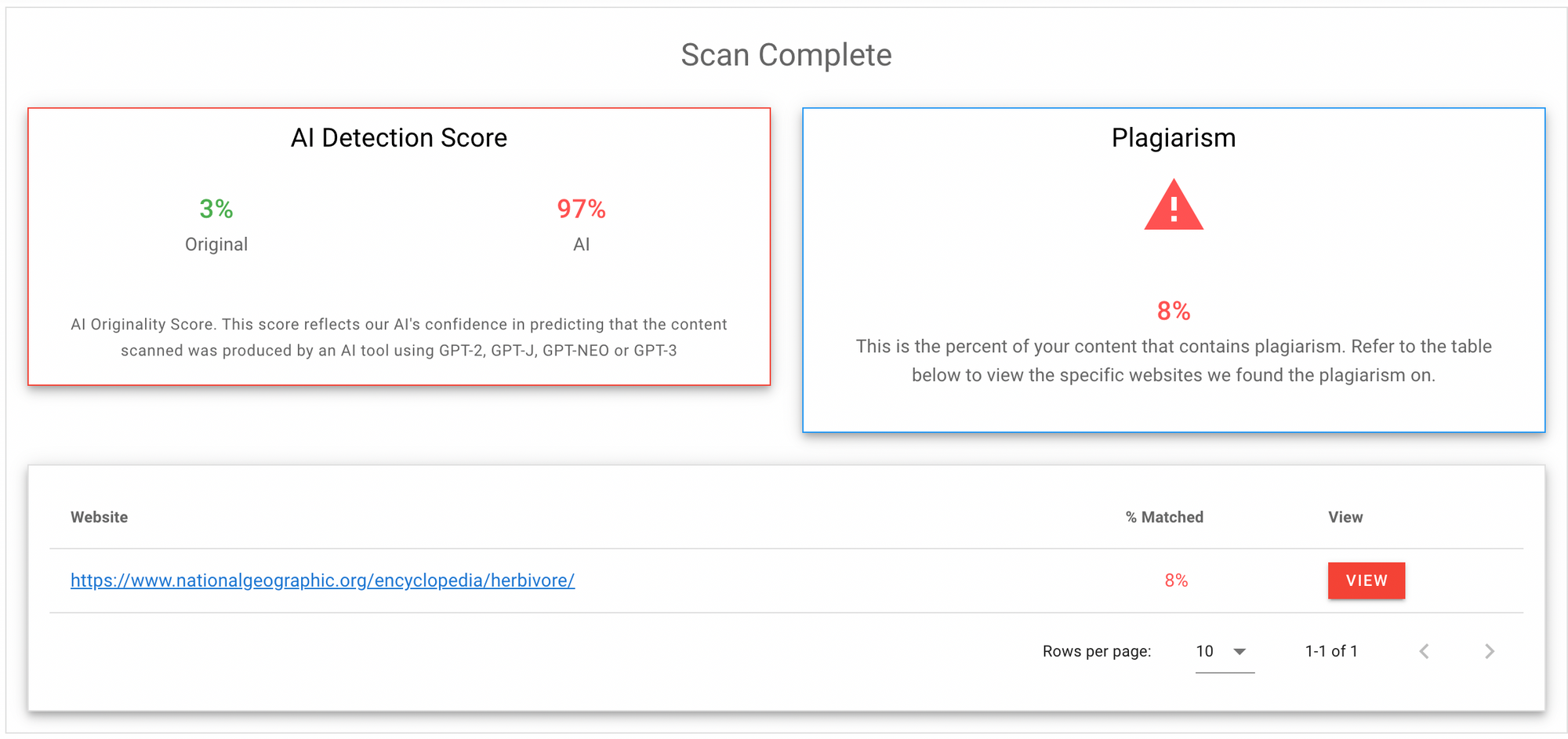

I then bought an account at Originality.ai and tested the content ($10 for 2,000 credits, it's about 2 credits per 200 words).
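As a rough sketch of what that pricing means per scan, the snippet below assumes credits scale linearly at the quoted rate of about 2 credits per 200 words. The function name and constants are illustrative only; they are not part of any Originality.ai API or documented price list.

```python
# Illustrative cost estimate. The rates come from the figures quoted above;
# linear scaling with word count is an assumption, not documented pricing.
import math

PRICE_USD = 10.0          # price paid for the credit bundle
CREDITS_IN_BUNDLE = 2000  # credits included in that bundle
WORDS_PER_CREDIT = 100    # derived from "about 2 credits per 200 words"


def scan_cost_usd(word_count: int) -> float:
    """Estimate the cost in USD of scanning `word_count` words once."""
    credits = math.ceil(word_count / WORDS_PER_CREDIT)
    return credits * PRICE_USD / CREDITS_IN_BUNDLE


print(f"{scan_cost_usd(200):.3f}")   # the short sample
print(f"{scan_cost_usd(1500):.3f}")  # the full 1,500-word article
```

Under these assumptions, a single scan of the short sample costs about a cent, and a scan of the full article costs under ten cents.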

The results, as expected, show a 0% probability that the content is original.

I strongly encourage anyone outsourcing content to use a tool like this to verify their content is written by a human. It also checks for plagiarism, which is pretty useful.

As far as our experiment goes, this set a benchmark of 0% for us to improve upon.

Originality.ai recently confirmed their ability to detect content produced by ChatGPT, GPT-3, and GPT-3.5.

Here's a quote from their recent study:

"We tested 20 articles across GPT-3, GPT-3.5, and ChatGPT and ran each result through Originality.AI to determine the effectiveness of identifying AI content.

The results are that both ChatGPT and GPT-3 can be successfully identified with the existing AI but are superior to GPT-3."

Google considers AI content "spam" so, in its current form, publishing this would be a clear violation of Google's guidelines.

Assuming Google uses similar algorithms to detect AI, this content, however keyword-optimized, will not be accepted in the long term.

And, because Google's Helpful Content update is a site-wide signal, publishing too much unedited AI-written content will likely result in your site being classified as unhelpful.

But the question we're going to address in this article is: how much time will it take me to make this content passable?

How to pass Google's AI content detection in as little time as possible

Four experiments that result in an 83% probability of original content

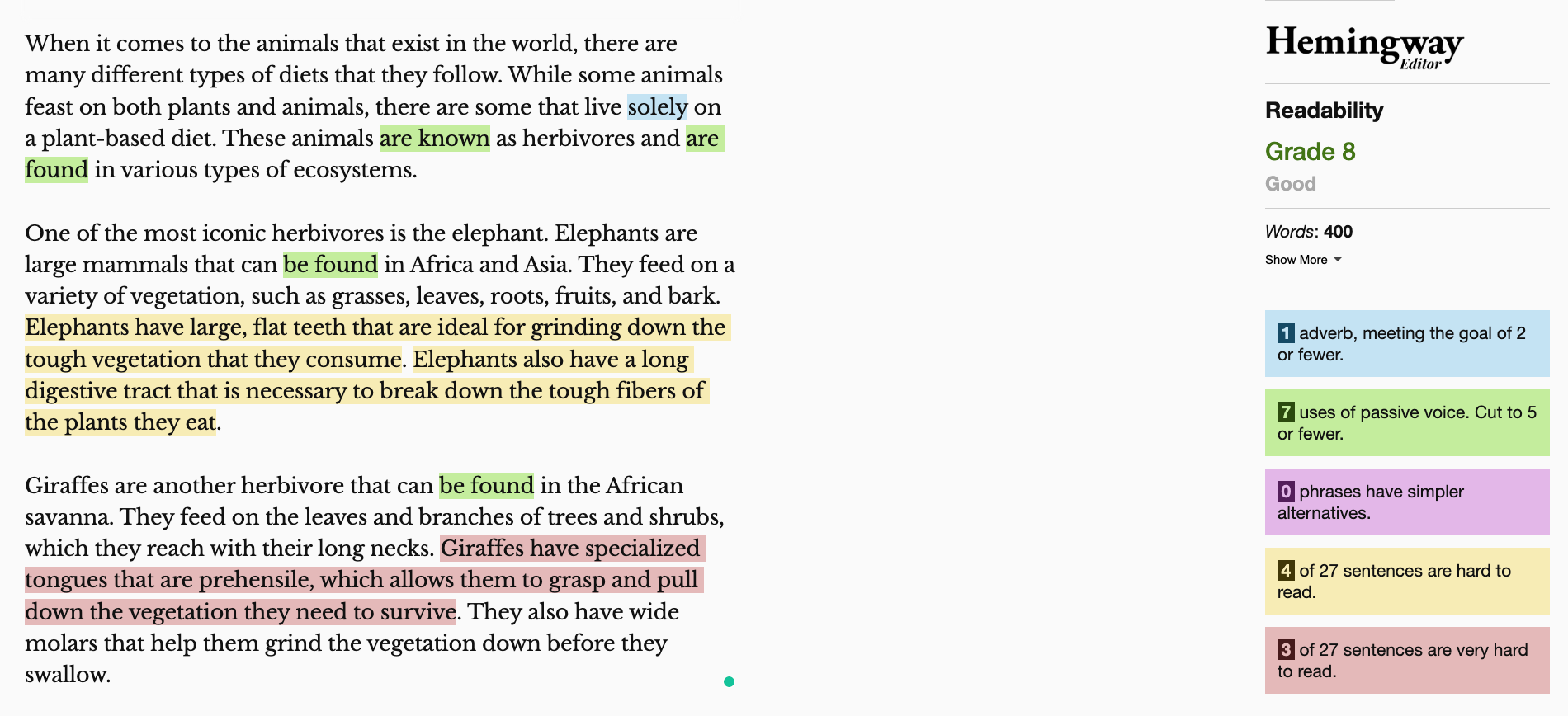

Experiment 1: Just Hemingway Correction

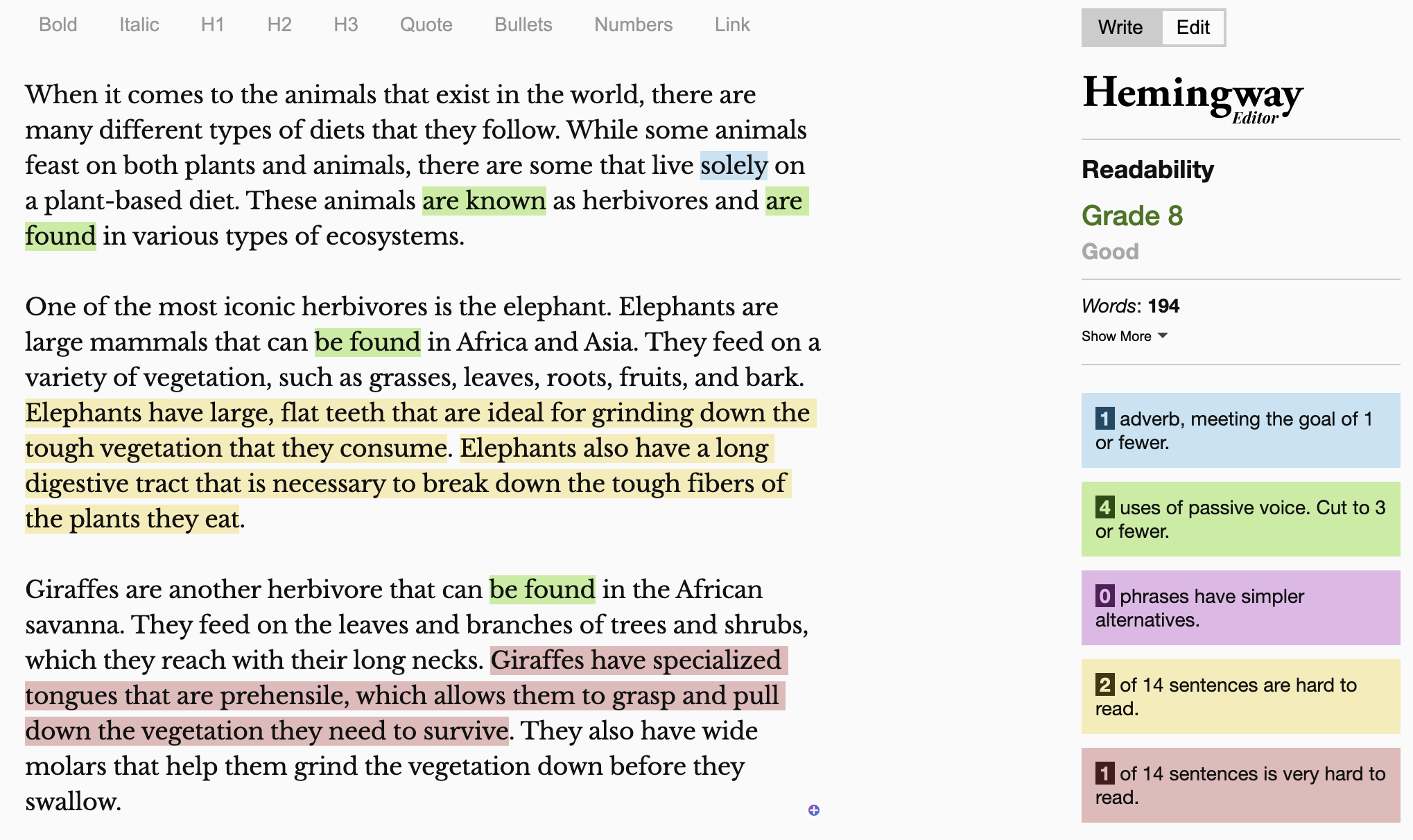

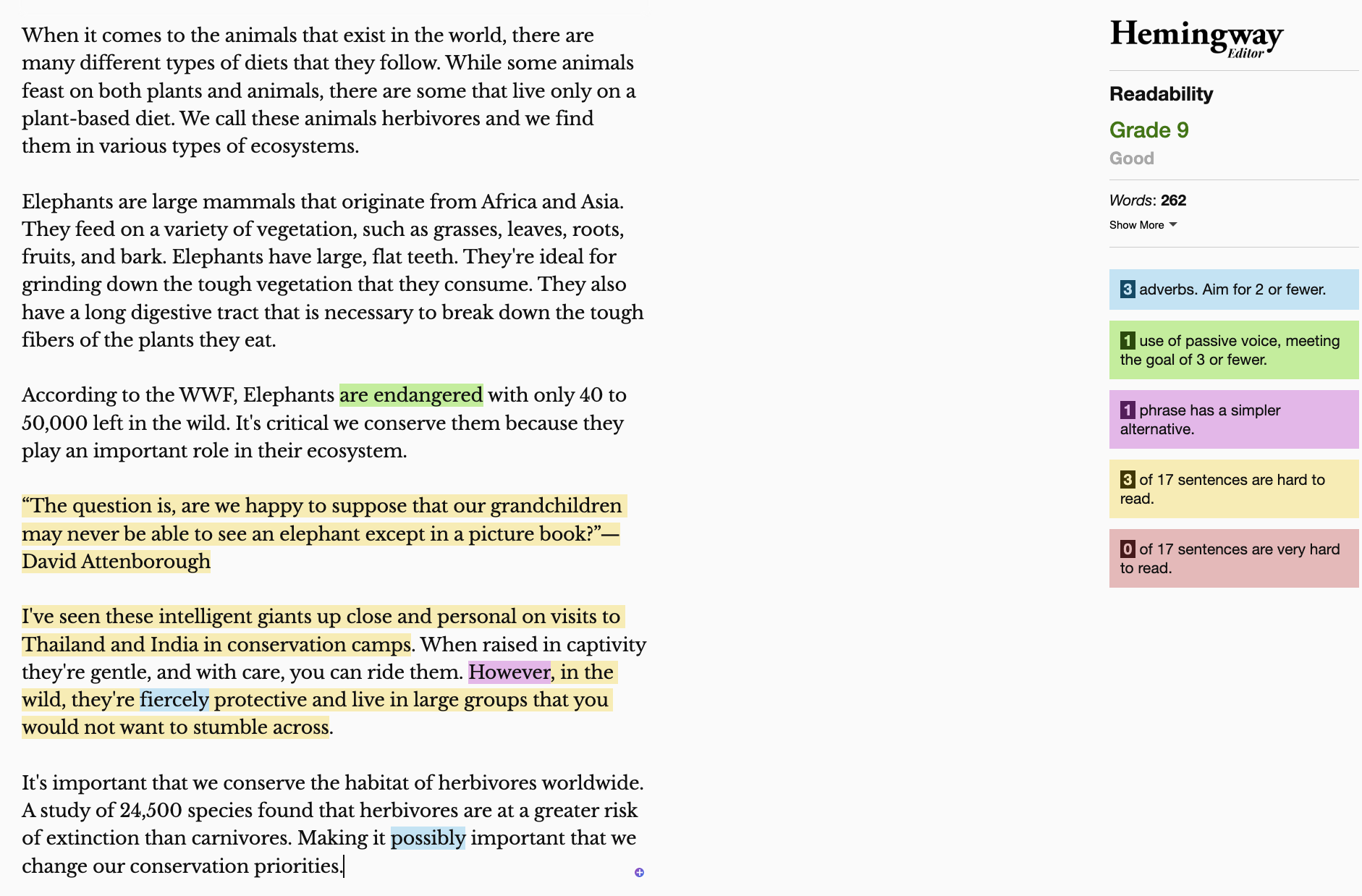

To start off with, I wanted to test just correcting anything flagged by Hemingway Editor, which aims to simplify readability and remove passive voice.

I wanted to start here simply because it takes minimal brain power and would be an "ideal" solution if it worked.

Here's the AI article in Hemingway:

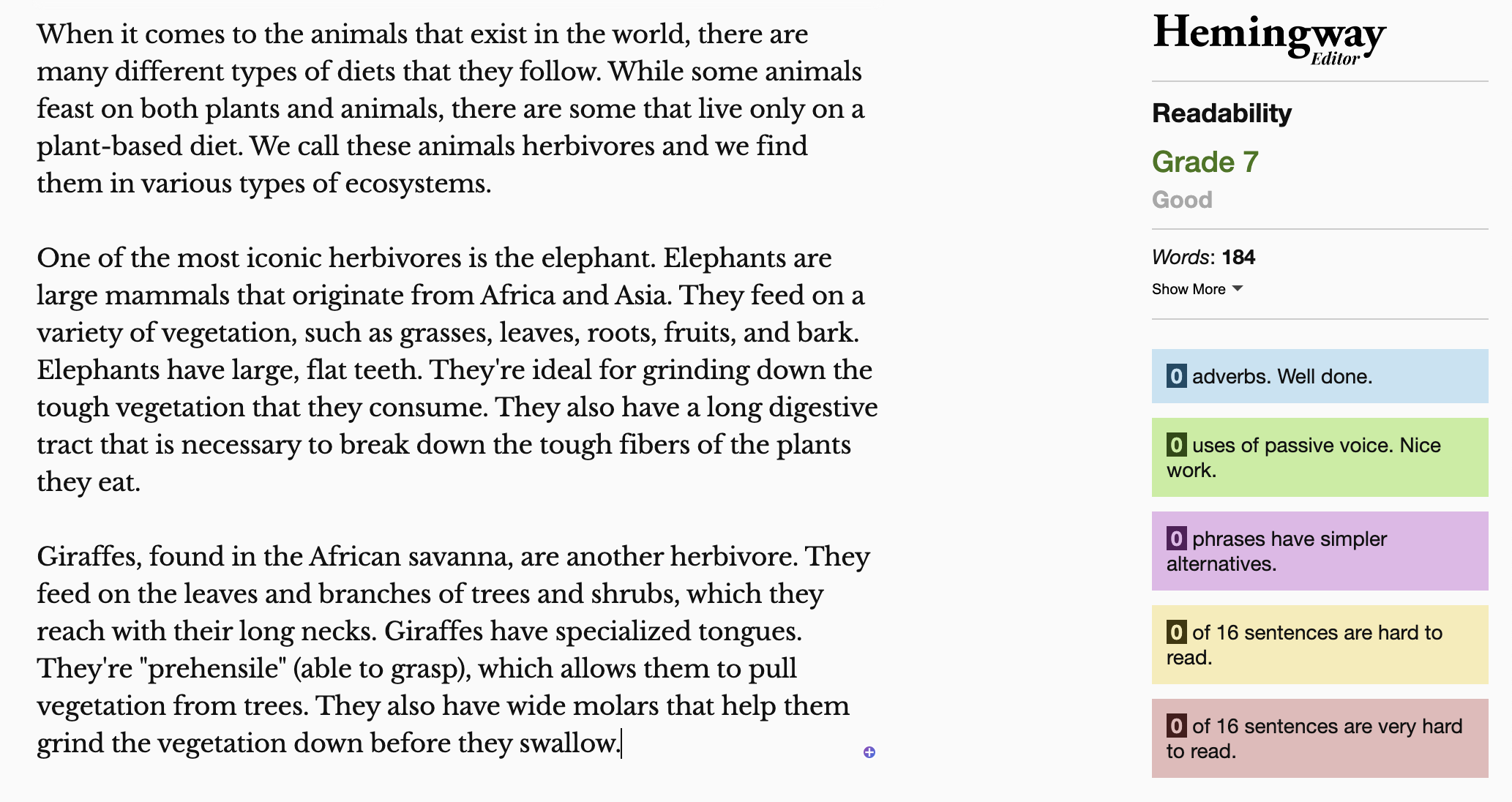

6 minutes and 4 seconds later (longer than I expected), we've got this more readable piece of text that passes Hemingway.

And, the experiment gave a negative result. Our score improved, as I would expect, but only to 2%.

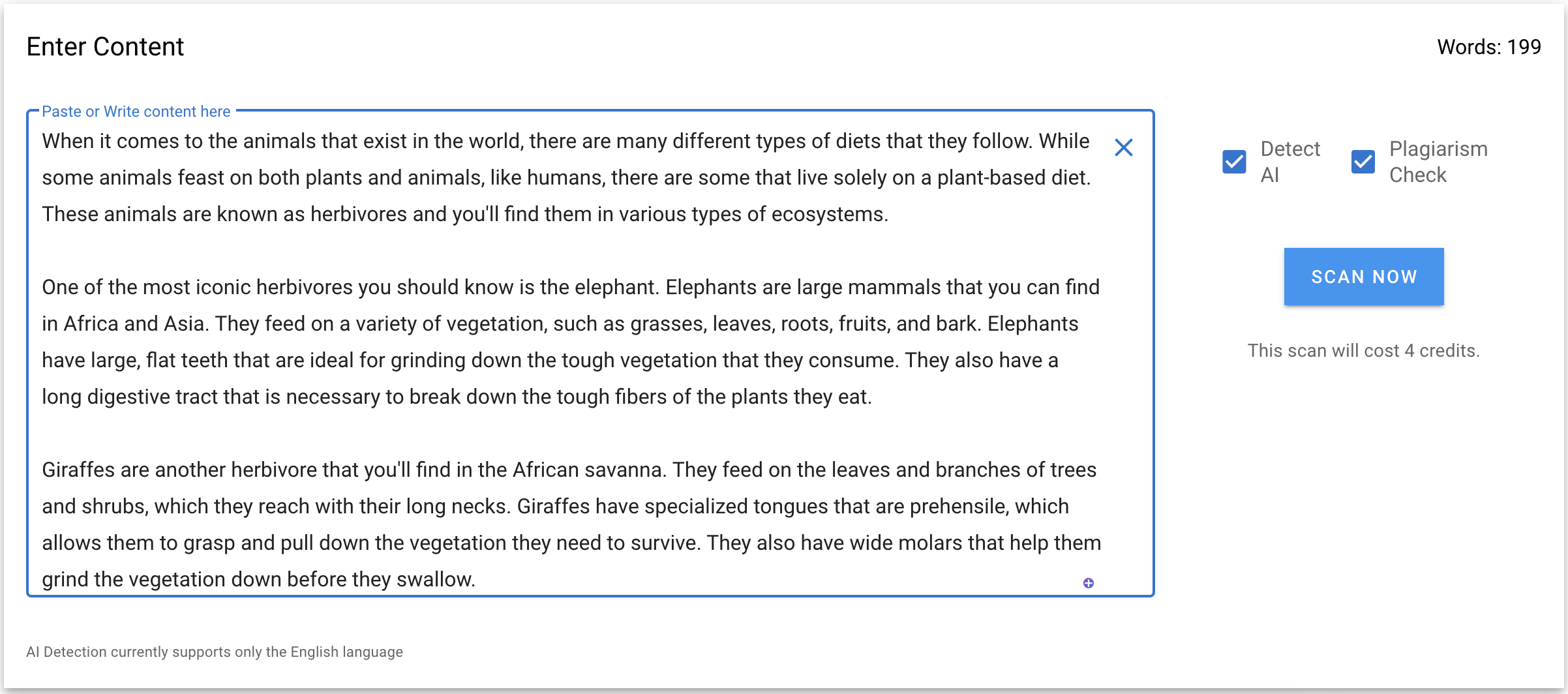

Experiment 2: Changing Pronouns

This post on LinkedIn by Emilia Korczynska gave me an idea.

In this experiment, like in the first, I wanted to test how little I could change. Would adding a few "your" and "you" pronouns to the original text suffice?

Adding these pronouns was surprisingly difficult for an informational article about animals, but I slipped in a few "you should know's" to get this:

It took just 2 minutes, but it promptly failed the AI detection. My guess is that I didn't change enough, but there wasn't enough opportunity to.

I decided to take another 54 seconds to also improve sentence structure, and I made a couple of other tiny changes to improve the credibility of the piece.

This still didn't overcome AI detection software, but we are at 3%. A small improvement!

Experiments 1 and 2, while unsuccessful, suggest that making minor changes that take only a few minutes is not enough to pass AI detection software.

We need to go deeper.

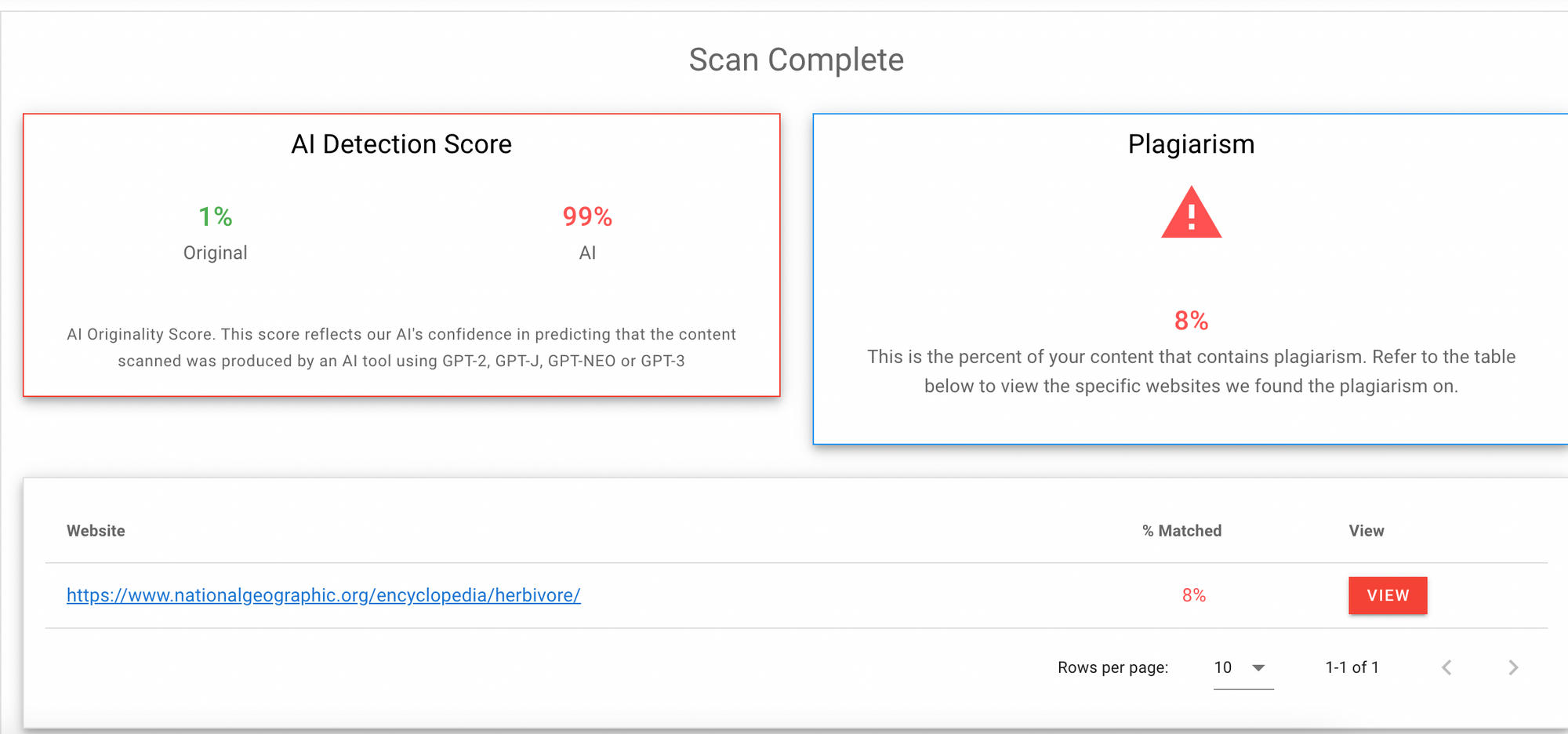

Experiment 3: Adding Originality

Good content has an opinion, shows expertise, and conveys personality. Three things that AI struggles to bring to the table naturally.

In an effort to overcome AI detection, I sprinkled some in myself.

1. I added our (made-up) brand angle to show the reader what we believe

In this case, I imagined I was writing this content for an animal conservation charity.

2. I added an expert quote to add a little sizzle

Yep, I just Googled "David Attenborough quote on elephants"

3. I included a personal story to make this piece unique

I've seen elephants in India and thought I'd throw that in there.

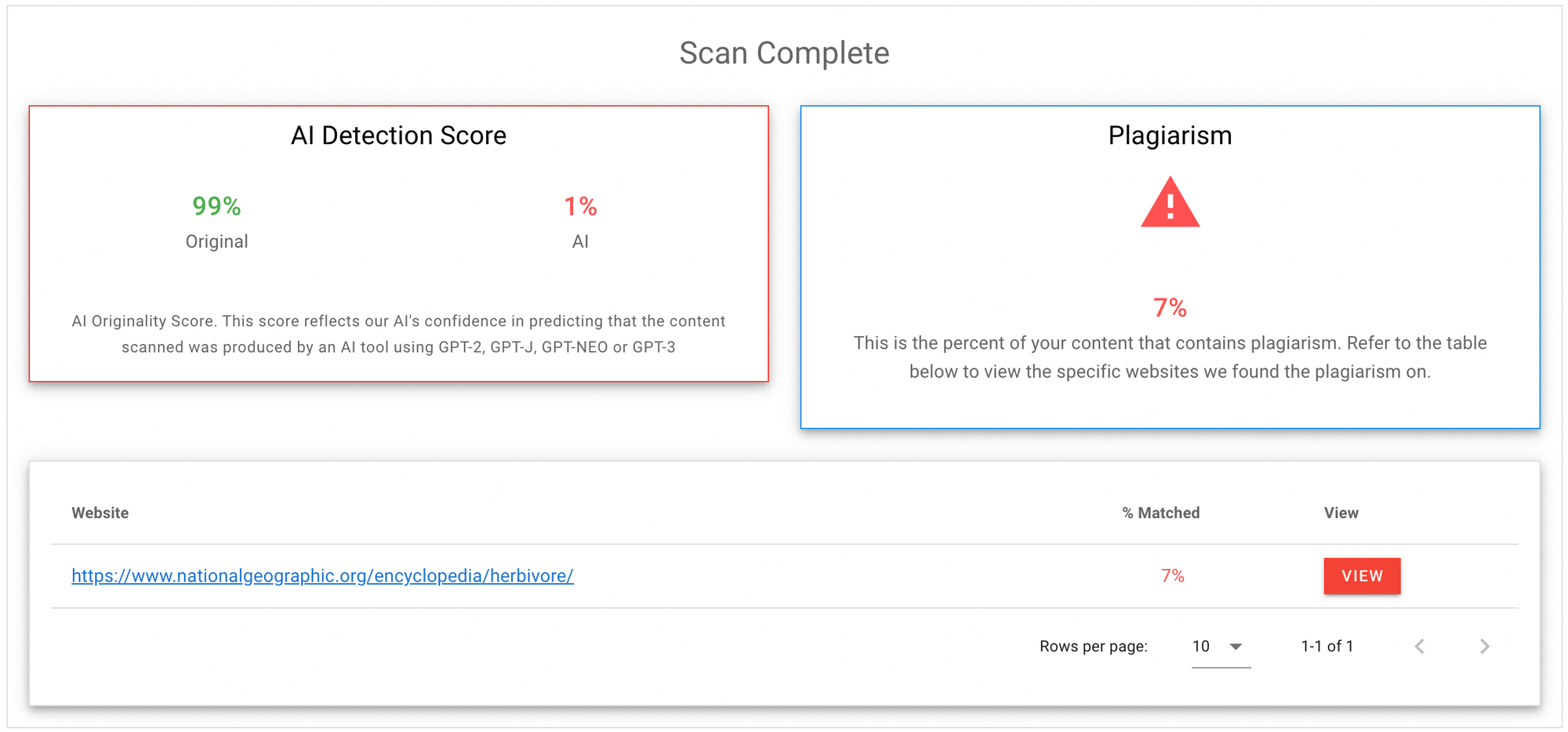

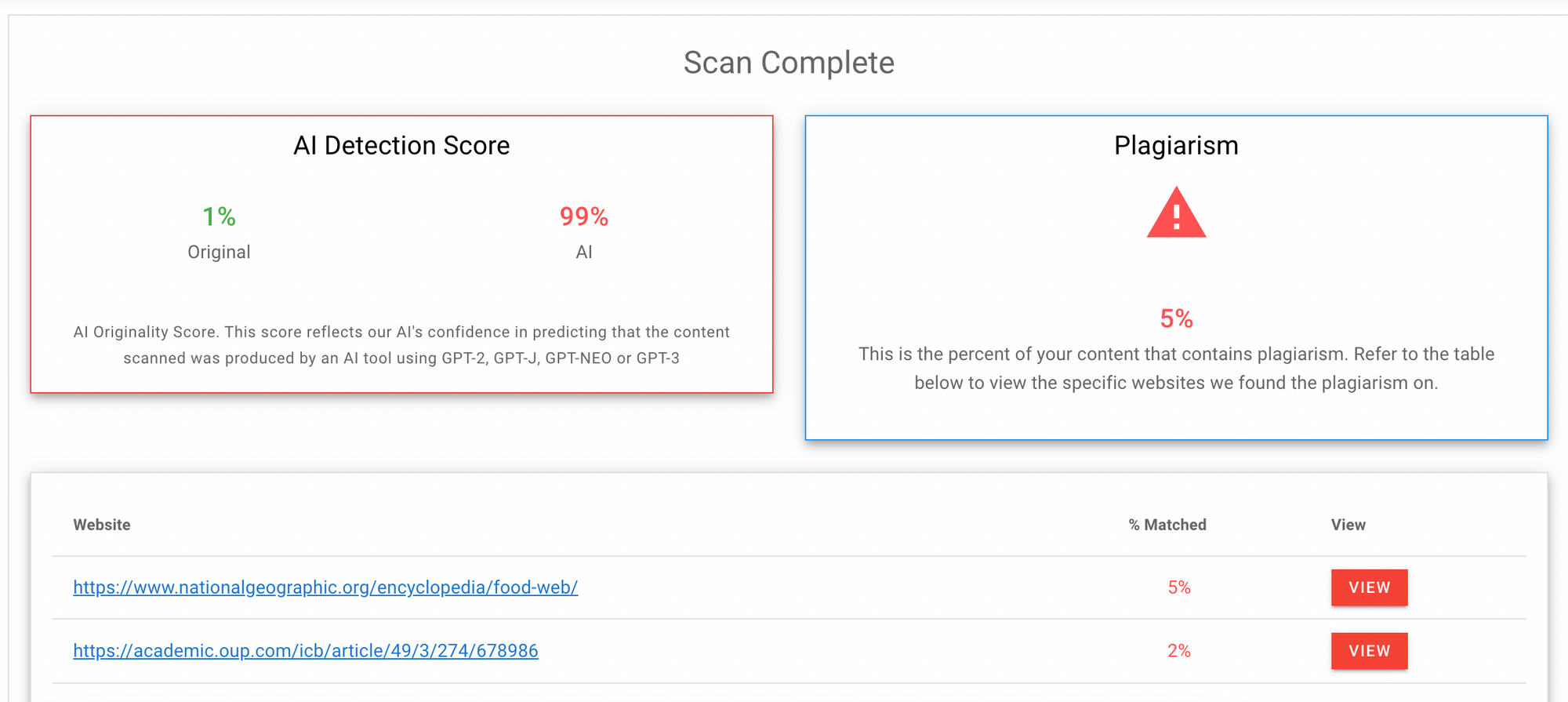

Here's the result. It took me 12 minutes and 4 seconds to add, most of which was spent looking for quotes and statistics.

These 100 additional words paid off. The content is now considered 99% original, despite it perhaps being 40-50% original.

This seems like a success, although I wonder if it would have taken me less than 12 minutes overall to write 262 words from scratch.

While I had to spend time considering what to edit, the AI took most of the cognitive load away from me upfront. It decided we'd be talking about elephants and it defined herbivores for me.

It was hugely useful that OpenAI gave me the bones in a few seconds.

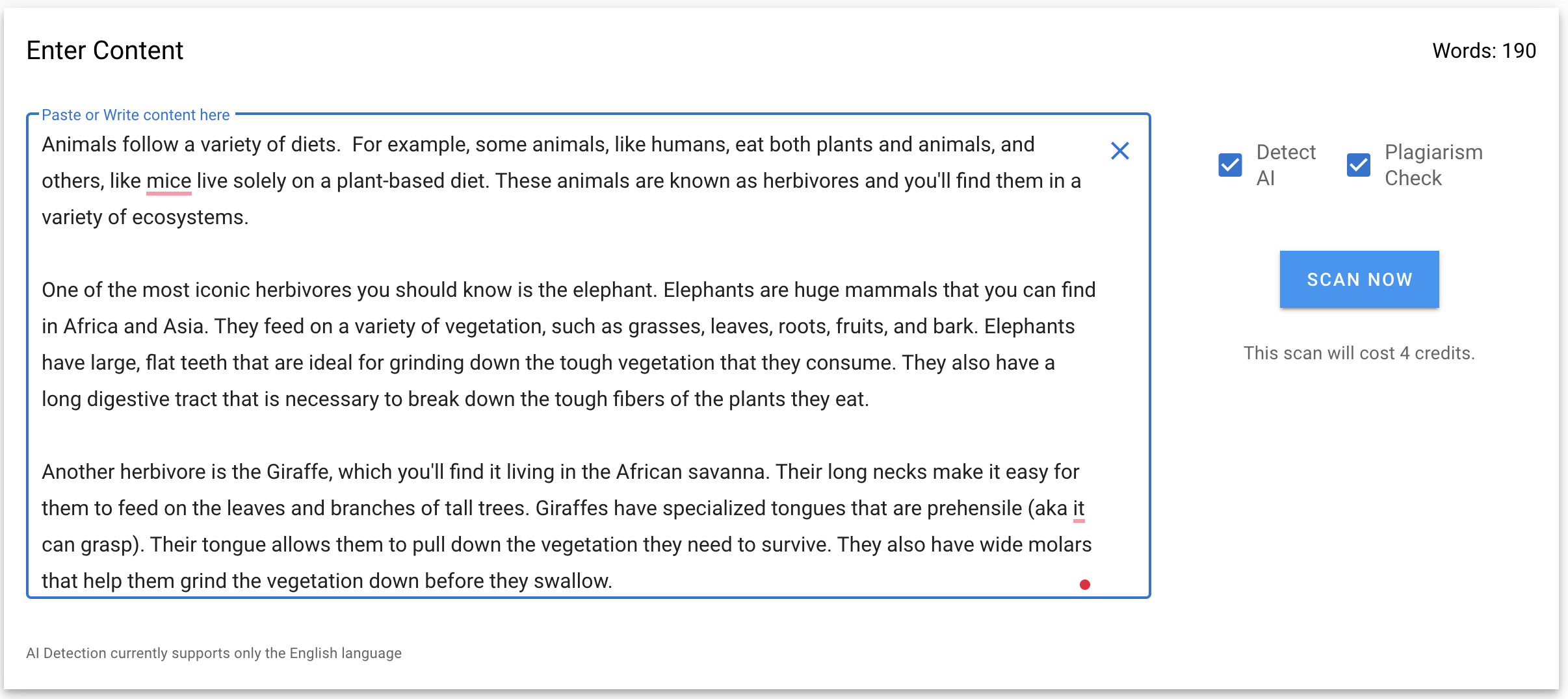

Experiment 4: Extrapolating to 1,500 words

So, in experiment three we found that for 100-200 words, it would take about 12 minutes to pass an AI detection checker.

A clunky way to extrapolate would be to multiply that by 10, and assume a 1,500-word article would take 120 minutes—2 hours in total to create.
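That clunky estimate can be written out as a quick sketch. The scale factor of 10 is the rough multiplier used above, not a measured value, and the function name is purely illustrative.

```python
# Linear extrapolation of editing time from the short sample to the full article.
# Assumes effort grows in proportion to length, which is exactly what the
# next experiment puts to the test.
MINUTES_FOR_SAMPLE = 12  # Experiment 3 editing time, rounded
SCALE_FACTOR = 10        # rough multiplier from the short sample to 1,500 words


def linear_estimate(sample_minutes: float, scale: float) -> float:
    """Return the estimated editing time if effort scaled with length."""
    return sample_minutes * scale


estimate = linear_estimate(MINUTES_FOR_SAMPLE, SCALE_FACTOR)
print(estimate, "minutes, or", estimate / 60, "hours")
```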

But, what if the relationship wasn't linear? Instead, what if a few small changes would make the entire article pass the originality checker?

I was determined to see how little I would have to change to make 1,500 words pass AI detection.

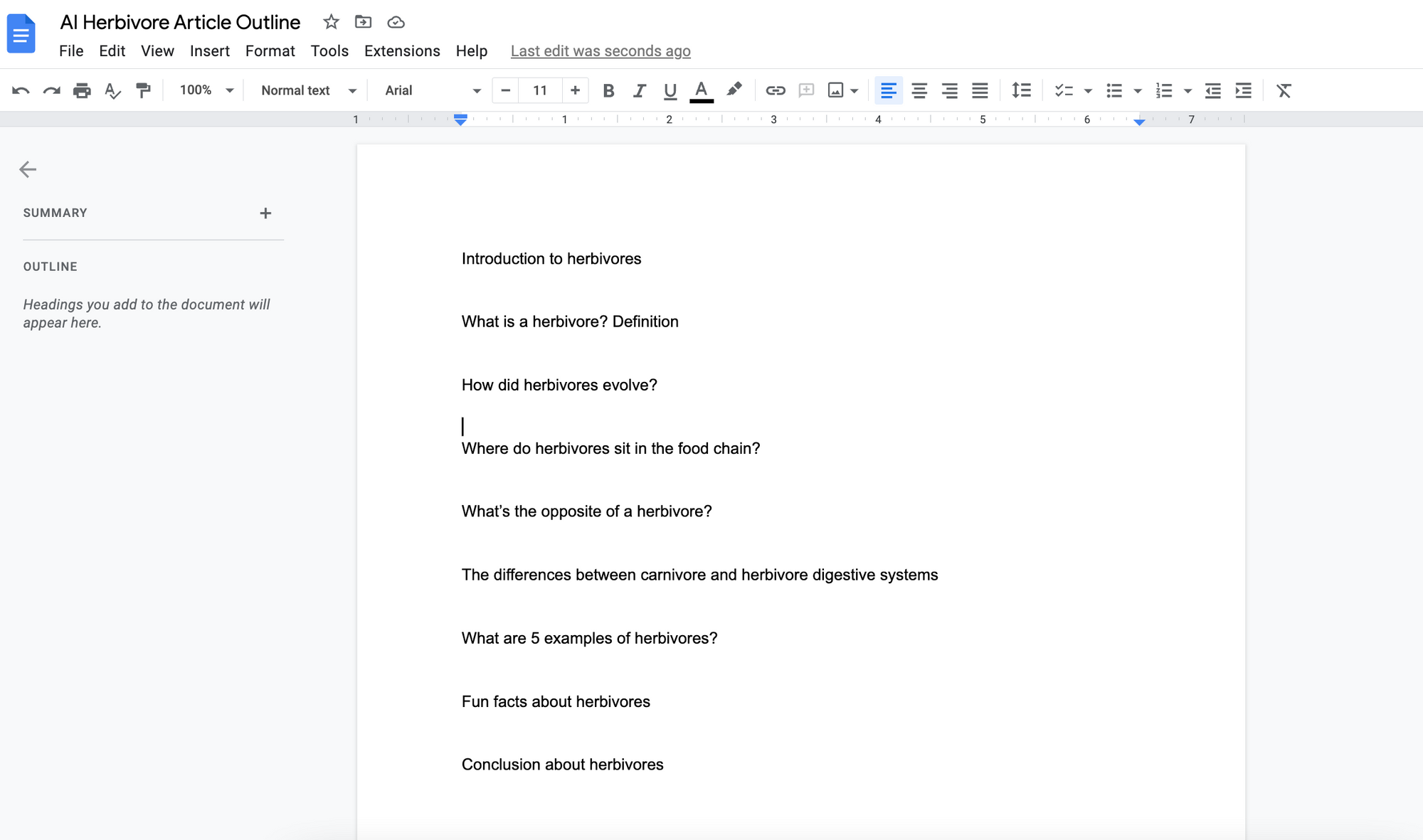

Here's a basic outline I put together in a couple of minutes, created by simply pulling headlines and FAQs from existing SERPs.

I then asked ChatGPT to write each of these sections until we got to 1,500 words. The result was this article.

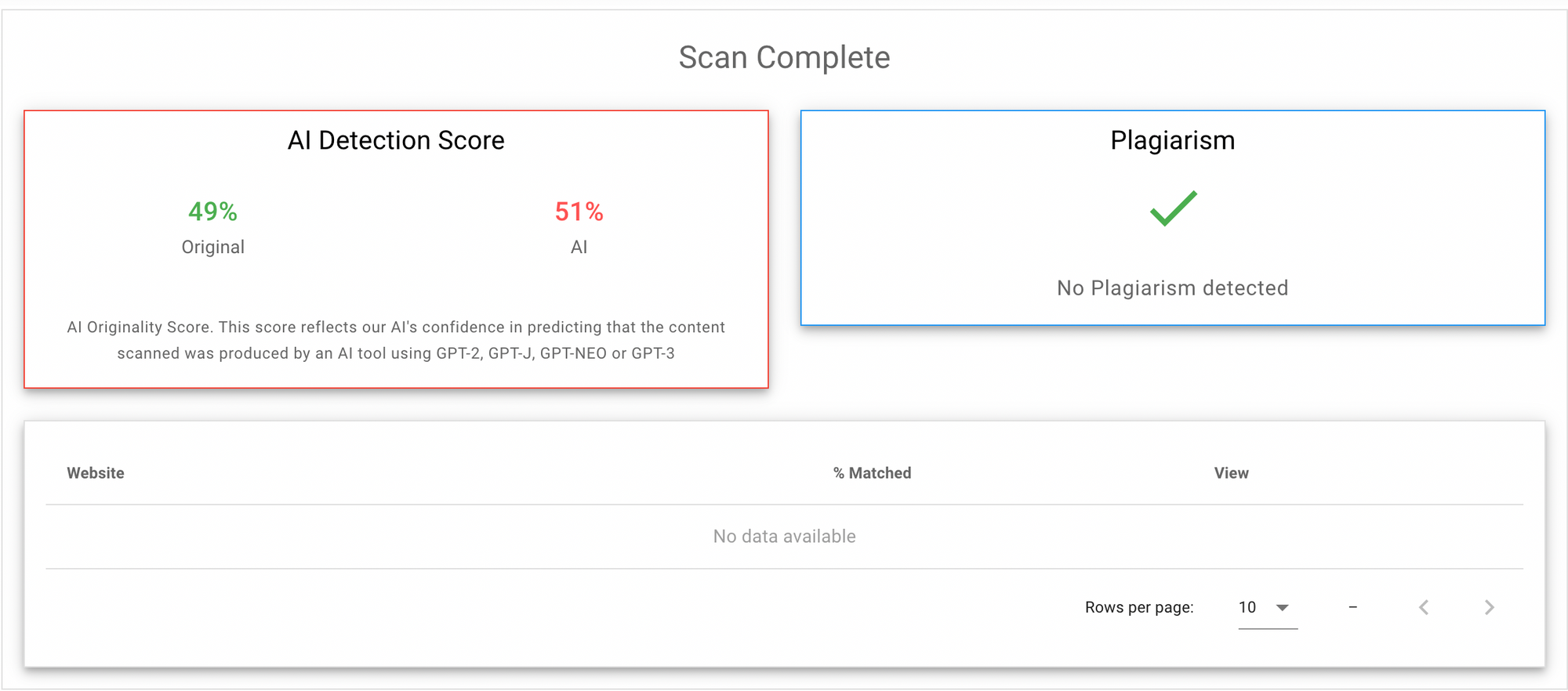

I copied and pasted it into Originality.ai and, as suspected, here are the results:

Now, let's add the exact same originality (12 minutes, same quotes, and a few small changes) as in my first text.

Adding just the same 155 words of "originality" brought these results:

This shows that in 12 minutes, a 1,500-word article would be considered 49% original.

Already, that's pretty good.

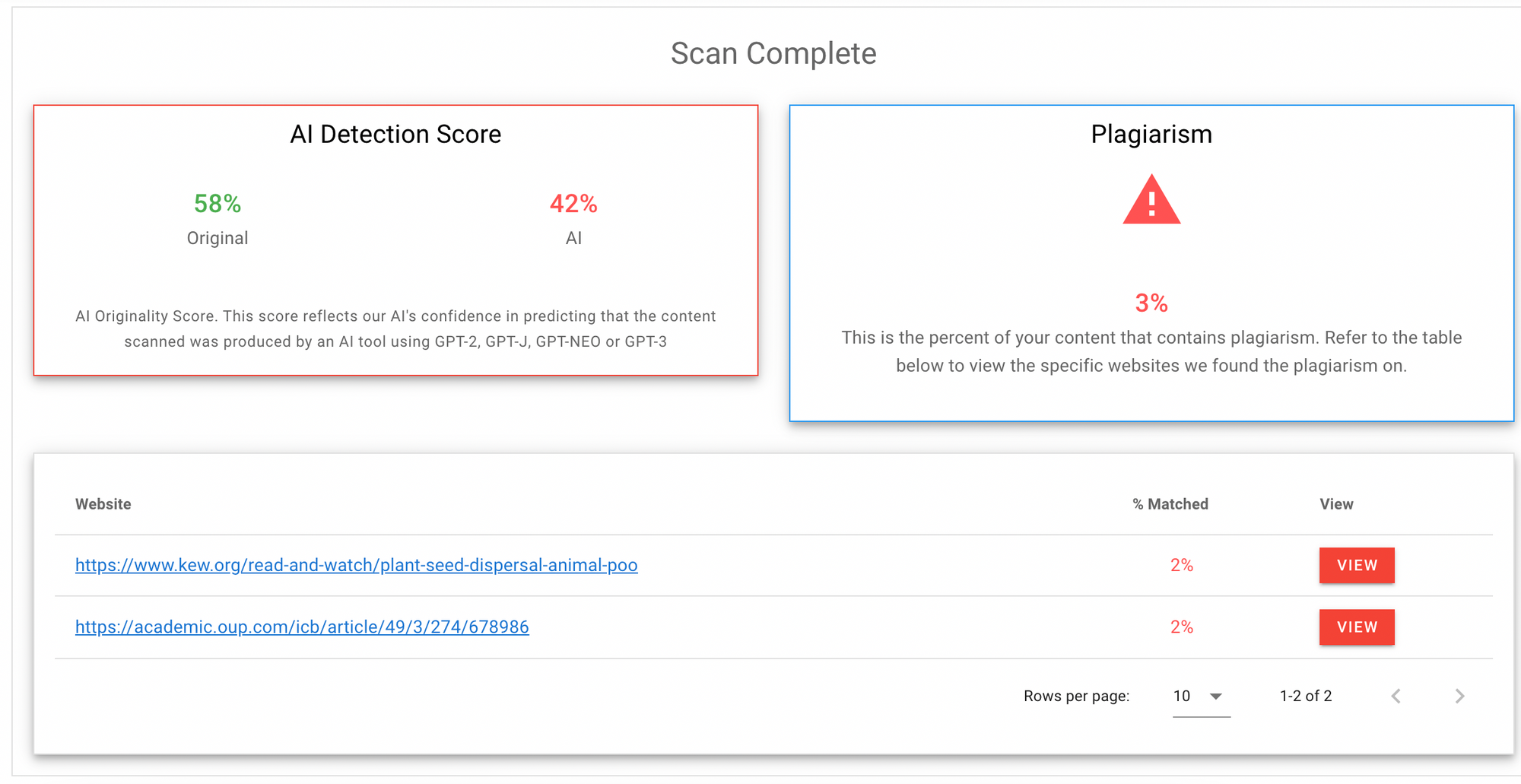

In 5 more minutes, rewriting a sentence here and there, I pushed it up to 58%:

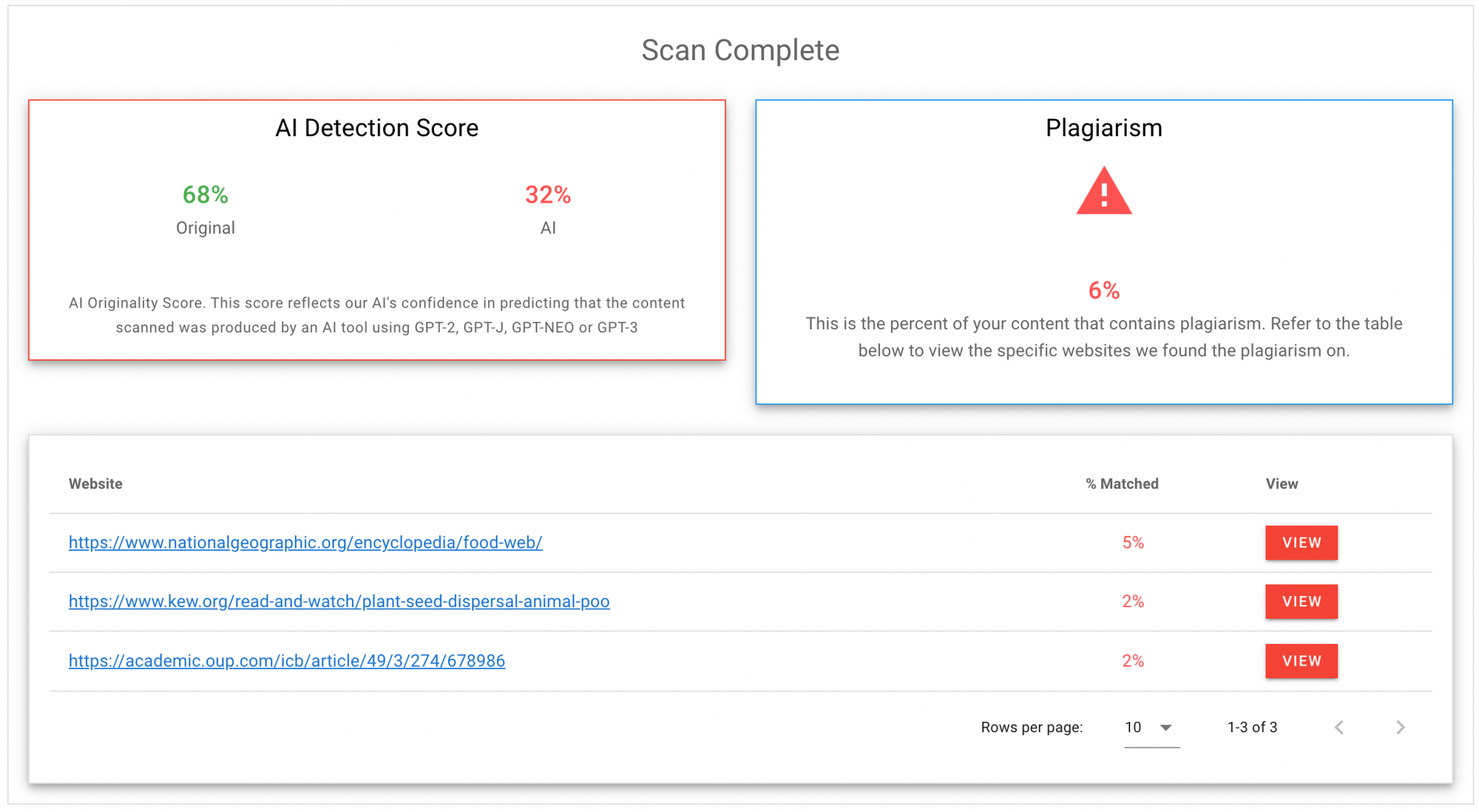

With another 5 minutes of similar edits, I got to 68%.

And, after yet another 5 minutes of adding and rewriting sentences, I got to 83% probability of original content.

I'm gonna call that a win and go to bed (literally, it's late over here).

You can review the final article here (changes are highlighted in green).

In total, I added or changed around 500 words (some of which included quotes found on the internet) in a total of 27 minutes.

That means 33% of this article was original, and AI wrote the rest. Together, we had an 83% probability of being an original article.
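To make the progression easier to follow, here is a small sketch that replays the editing passes reported above and recomputes the share of the article that was changed. The numbers are the ones from this experiment (starting from the 0% score of the unedited article); the variable names are just for illustration.

```python
# Editing log for the 1,500-word article:
# (minutes spent in this pass, originality score after the pass).
passes = [(12, 49), (5, 58), (5, 68), (5, 83)]

elapsed = 0
previous_score = 0  # the unedited AI article scored 0%
for minutes, score in passes:
    elapsed += minutes
    print(f"after {elapsed:>2} min: {score}% (+{score - previous_score} points)")
    previous_score = score

WORDS_CHANGED = 500   # approximate, as reported
ARTICLE_WORDS = 1500
share_changed = WORDS_CHANGED / ARTICLE_WORDS
print(f"total editing time: {elapsed} min, share changed: {share_changed:.0%}")
```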

The total time it took was around:

- 2 minutes whipping up a brief

- 5 minutes asking AI to write it

- 27 minutes making it pass AI detection to an 83% probability

Total time to write an acceptable article: 34 minutes.
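As a quick check of that tally, the sketch below sums the three steps and compares the actual editing time against the earlier linear guess of 120 minutes. The step labels are illustrative; the figures are the ones listed above.

```python
# Time spent on each step of the full article, in minutes.
steps = {
    "brief": 2,
    "AI drafting": 5,
    "editing to pass detection": 27,
}
total_minutes = sum(steps.values())

LINEAR_GUESS = 120  # the "multiply by 10" estimate for editing alone
editing_ratio = steps["editing to pass detection"] / LINEAR_GUESS

print(f"total: {total_minutes} min")
print(f"editing took {editing_ratio:.1%} of the linear estimate")
```

The editing step came in at well under a quarter of the linear guess, which supports the idea that the relationship between length and editing effort is not linear.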

Note: Please bear in mind my goal was not to create a compelling article; it was to make a passable piece of content for SEO.

Final words, from Seth Godin

I want to end this experiment with some wise words from Seth Godin on AI content.

"Technology begins by making old work easier, but then it requires that new work be better."—Seth Godin

With the latest improvements in GPT-3, anyone can write a student-level article on almost any topic.

Creating lots of mediocre content is easier than ever; it's instant. Creating average content is, therefore, utterly pointless.

"If your work isn’t more useful or insightful or urgent than GPT can create in 12 seconds, don’t interrupt people with it."—Seth Godin

In 37 minutes, I've made a piece of AI content that's undetectable. But, to what end?

We've created an average piece of content, with some level of originality and some facts that one might find useful.

It's likely this article could rank well in Google. But is it content that would earn a following? Is it content that would convert a visitor?

And, importantly, if someone else could write the same article as you in 37 minutes, how long is your success really going to last?